With the Winter School in GenAI for Academia coming to an end, we got to see how Nebula behaves under sustained load from a larger number of users, and the results are more than satisfying.

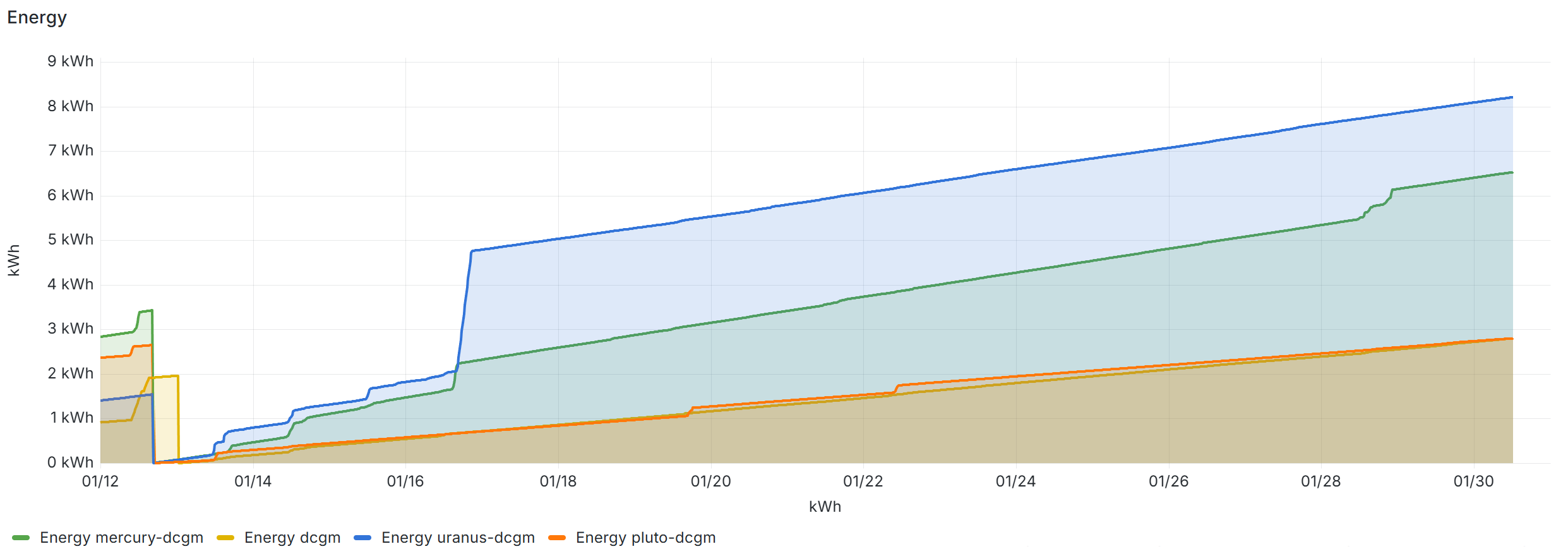

As an added bonus, and to showcase the power of running AI inference locally, here are a few Nebula figures we have recorded since the last update. Between the start of the Winter School (Jan 12), when the platform was last upgraded, and now (Jan 30), together we used:

– 42 600 010 tokens

– 10 165 different prompts

– 30 kWh (put more plainly, it’s like using an electric oven for 10 hours, or running a fridge for a month)
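
To put these totals in per-prompt terms, here is a minimal sketch of the arithmetic. It assumes the 30 kWh figure is a rounded total and that all of it can be attributed to the recorded prompts, so the results are rough averages rather than measurements. The function name `usage_summary` and its keys are illustrative and not part of Nebula.

```python
def usage_summary(tokens: int, prompts: int, energy_kwh: float) -> dict:
    """Derive rough per-prompt and per-token averages from reported totals."""
    energy_wh = energy_kwh * 1000  # 1 kWh = 1000 Wh
    return {
        "tokens_per_prompt": tokens / prompts,
        "wh_per_prompt": energy_wh / prompts,
        "wh_per_1k_tokens": energy_wh / tokens * 1000,
    }


# Totals reported above for Jan 12 to Jan 30 (30 kWh is assumed to be rounded).
summary = usage_summary(tokens=42_600_010, prompts=10_165, energy_kwh=30)

for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

With the numbers above, this works out to roughly 4,190 tokens per prompt, about 2.95 Wh per prompt, and about 0.70 Wh per 1,000 tokens.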