Multiplayer VR platform expands research-ready social interaction data collection

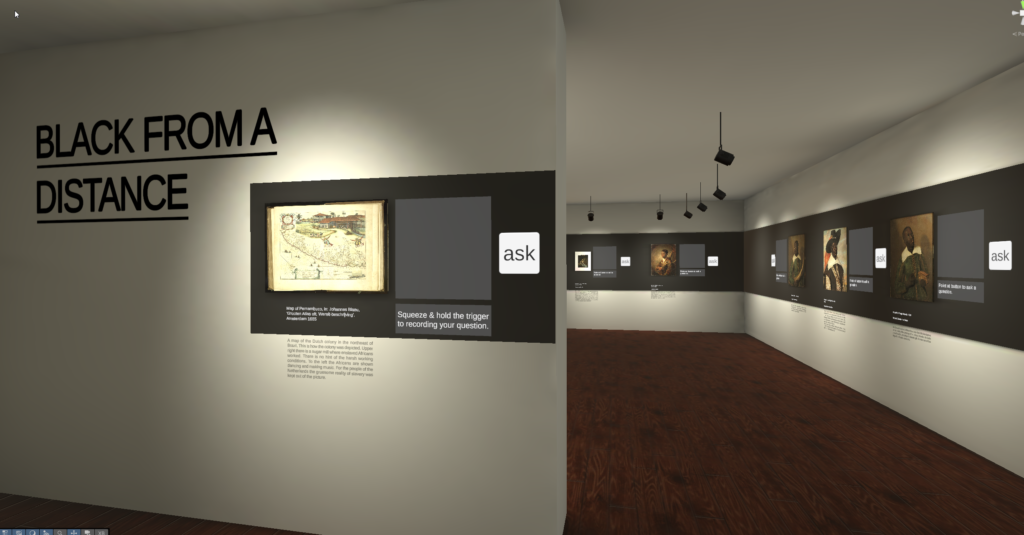

Many core research questions in Hybrid Intelligence, such as fairness, collaboration, and adaptive support, play out in group interaction. Yet physical-world studies in museums and public spaces are difficult to instrument and control, making it hard to systematically compare interaction designs or validate AI interventions. They also limit what we can capture beyond observation and self-report. A VR Museum (VRM) addresses this gap by providing a controlled, repeatable environment where exhibition content and interaction design can be varied systematically while collecting rich interaction and behavioral traces.

By extending the VRM to a multiplayer setting, the platform enables studies of co-visiting behavior, including joint attention, conversational dynamics, coordination, and inclusion. With synchronized multimodal logging (e.g., gaze, pose, position, and voice), social VR sessions become analyzable datasets, supporting rapid prototyping, small-scale user studies, and future work on social interaction design and the impact of AI guidance on group dynamics.

The Tech Labs of the Network Institute have been developing additional functionality and upgrading existing ones for the past two years. This latest incarnation involved adding networking to facilitate multiplayer access, realistic avatar bodies that are animated based on head and hand tracking information, and synchronisation of the avatars’ movement across the network.

Many VR applications (e.g. video games) use only virtual hands that enable the player to interact with the virtual environment. Having an entire virtual humanoid body that is linked to the tracking data coming from the VR headset and handheld controllers requires a complex set of calculations that also take the relative movement of the entire bone structure into account (inverse kinematics). Software to do this is not easy to find and often harder to implement. We chose the BNG Framework as it offers a wide choice of interactions, smooth animation and relatively easy setup.
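To give a feel for the kind of calculation inverse kinematics involves, here is a minimal sketch of a planar two-bone solver (for example shoulder, elbow and hand) based on the law of cosines. It is an illustration only and not the method used by the BNG Framework or the VRM; the function names `two_bone_ik` and `forward` and the 2D simplification are assumptions made for this example.

```python
import math


def _clamp(value, low, high):
    return max(low, min(high, value))


def two_bone_ik(target_x, target_y, upper_len, lower_len):
    """Solve a planar two-bone chain rooted at the origin (hypothetical helper).

    Returns (shoulder_angle, elbow_angle) in radians. shoulder_angle is measured
    from the +x axis; elbow_angle is the forearm's rotation relative to the
    upper arm (0 means a fully straight arm).
    """
    dist = math.hypot(target_x, target_y)
    # Clamp the distance so unreachable targets resolve to the nearest reachable pose.
    dist = _clamp(dist, abs(upper_len - lower_len), upper_len + lower_len)
    dist = max(dist, 1e-9)  # avoid division by zero when the target is at the root

    # Law of cosines: interior angle at the elbow.
    cos_interior = (upper_len**2 + lower_len**2 - dist**2) / (2 * upper_len * lower_len)
    interior = math.acos(_clamp(cos_interior, -1.0, 1.0))
    elbow_angle = math.pi - interior

    # Angle between the upper arm and the line from the root to the target.
    cos_offset = (upper_len**2 + dist**2 - lower_len**2) / (2 * upper_len * dist)
    offset = math.acos(_clamp(cos_offset, -1.0, 1.0))
    shoulder_angle = math.atan2(target_y, target_x) - offset
    return shoulder_angle, elbow_angle


def forward(shoulder_angle, elbow_angle, upper_len, lower_len):
    """Forward kinematics: hand position for the given joint angles."""
    elbow_x = upper_len * math.cos(shoulder_angle)
    elbow_y = upper_len * math.sin(shoulder_angle)
    hand_x = elbow_x + lower_len * math.cos(shoulder_angle + elbow_angle)
    hand_y = elbow_y + lower_len * math.sin(shoulder_angle + elbow_angle)
    return hand_x, hand_y
```

A full-body avatar solver has to do this in 3D for several limbs at once, with joint limits and only three tracked points (head and two hands) as input, which is why an off-the-shelf framework is attractive.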

The biggest hurdle turned out to be synchronising the avatars’ movement across the network so that every user sees all the other avatars. Apparently, this is not a feature commonly used in game development on industry-standard platforms such as Unity Pro. We found an experimental tool on GitHub that uses a special networking add-on (Mirror). Changing this to ‘easily’ accept new avatars through code was a tough nut to crack, and setting up new avatars for the VRM remains a cumbersome process. But it works!

The VRM logs information about eye gaze (where the user is looking), eye and lip expressions (information about eyes, eyelids, mouth and jaw), tracking data, recorded audio (user speech) and transcribed audio (user speech). All of these are logged using a network timer that allows easy synchronisation of the collected data offline.
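As an illustration of how a shared network timestamp can simplify offline analysis, the sketch below aligns several logged streams onto one timeline by picking, for each reference time, the nearest sample in every stream. The data layout (sorted, non-empty lists of timestamps with matching values) and the names `nearest_sample` and `align_streams` are assumptions made for this example; the actual VRM log format may differ.

```python
from bisect import bisect_left


def nearest_sample(timestamps, values, t):
    """Return the value whose timestamp is closest to t.

    Assumes timestamps is sorted ascending, non-empty, and the same
    length as values (hypothetical log layout).
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return values[0]
    if i == len(timestamps):
        return values[-1]
    before, after = timestamps[i - 1], timestamps[i]
    # Prefer the later sample only if it is strictly closer to t.
    return values[i] if after - t < t - before else values[i - 1]


def align_streams(reference_times, streams):
    """Build one row per reference time with the nearest sample of each stream.

    streams maps a stream name to a (timestamps, values) pair.
    """
    rows = []
    for t in reference_times:
        row = {"t": t}
        for name, (timestamps, values) in streams.items():
            row[name] = nearest_sample(timestamps, values, t)
        rows.append(row)
    return rows


# Made-up example data: gaze targets and positions sampled at slightly different times.
example_streams = {
    "gaze": ([0.00, 0.10, 0.20], ["exhibit_A", "exhibit_A", "exhibit_B"]),
    "position": ([0.02, 0.12, 0.22], [(0, 0), (0, 1), (0, 2)]),
}
aligned = align_streams([0.0, 0.1, 0.2], example_streams)
```

Nearest-sample matching is the simplest choice; continuous signals such as head position could instead be interpolated between neighbouring samples.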

This work is funded by the Hybrid Intelligence Centre.